I am a fourth-year direct-entry PhD student at the Institute of Information Engineering, Chinese Academy of Sciences, under the joint supervision of Professors Peng Fu, Zheng Lin, and Weiping Wang. My research interests include parameter-efficient fine-tuning and efficient training of large language models.

To date, I have published four long papers at ACL as first author, one of which received the SAC Highlights Award (Top 1.5%).

🔥 News

- 2025.08: 🎉🎉 Our Trans-PEFT paper is selected for the ACL 2025 SAC Highlights Award (Top 1.5%).

- 2025.08: 🎉🎉 Two papers are accepted by EMNLP 2025.

- 2025.05: 🎉🎉 Three papers are accepted by ACL 2025.

- 2024.12: 🎉🎉 One paper is accepted by AAAI 2025.

📝 Selected Publications (Full List)

🧍‍♂️ First-Author Papers

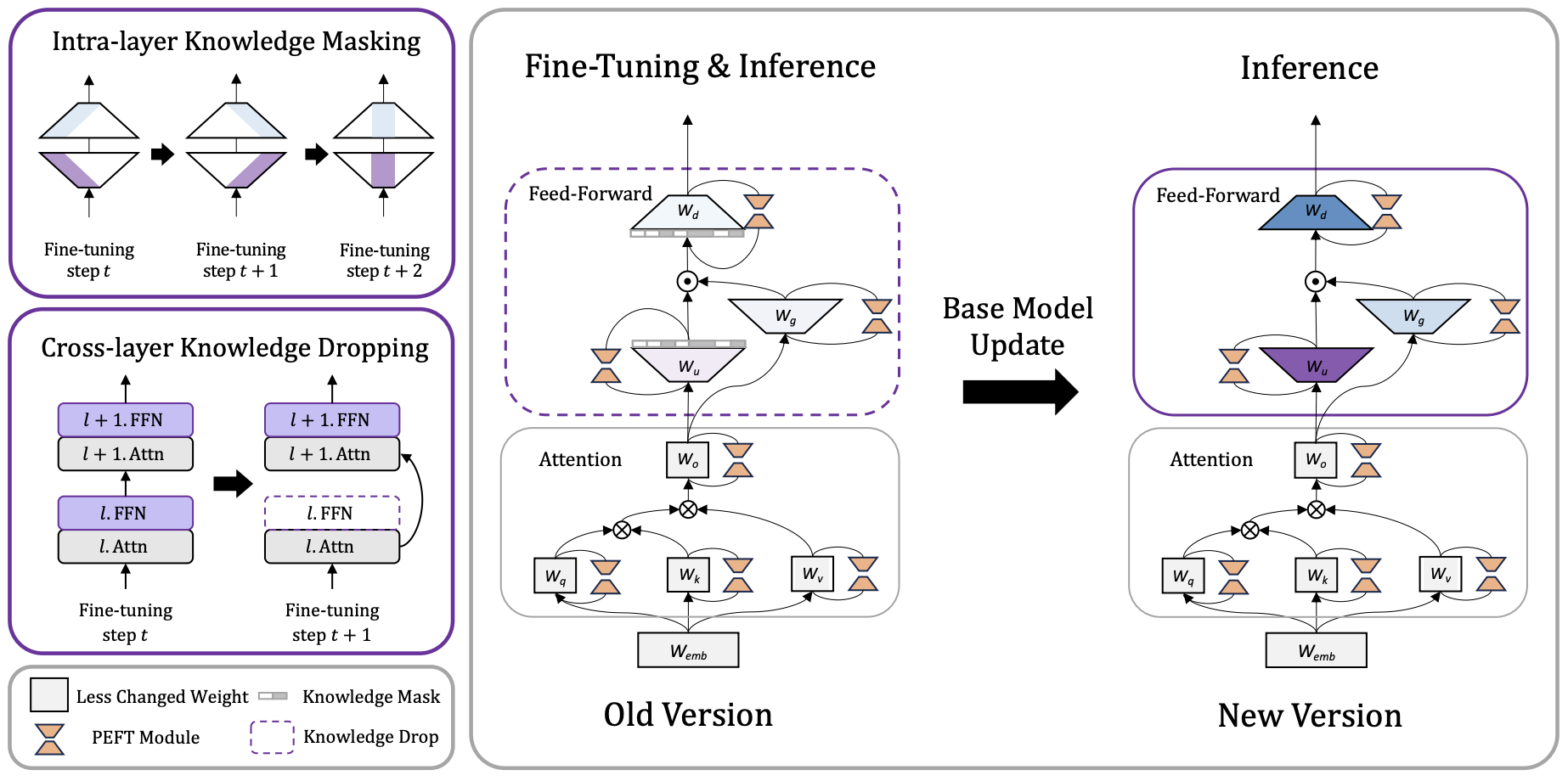

Adapt Once, Thrive with Updates: Transferable Parameter-Efficient Fine-Tuning on Evolving Base Models (SAC Highlights Award, Top 1.5%)

Naibin Gu, Peng Fu, Xiyu Liu, Ke Ma, Zheng Lin, Weiping Wang

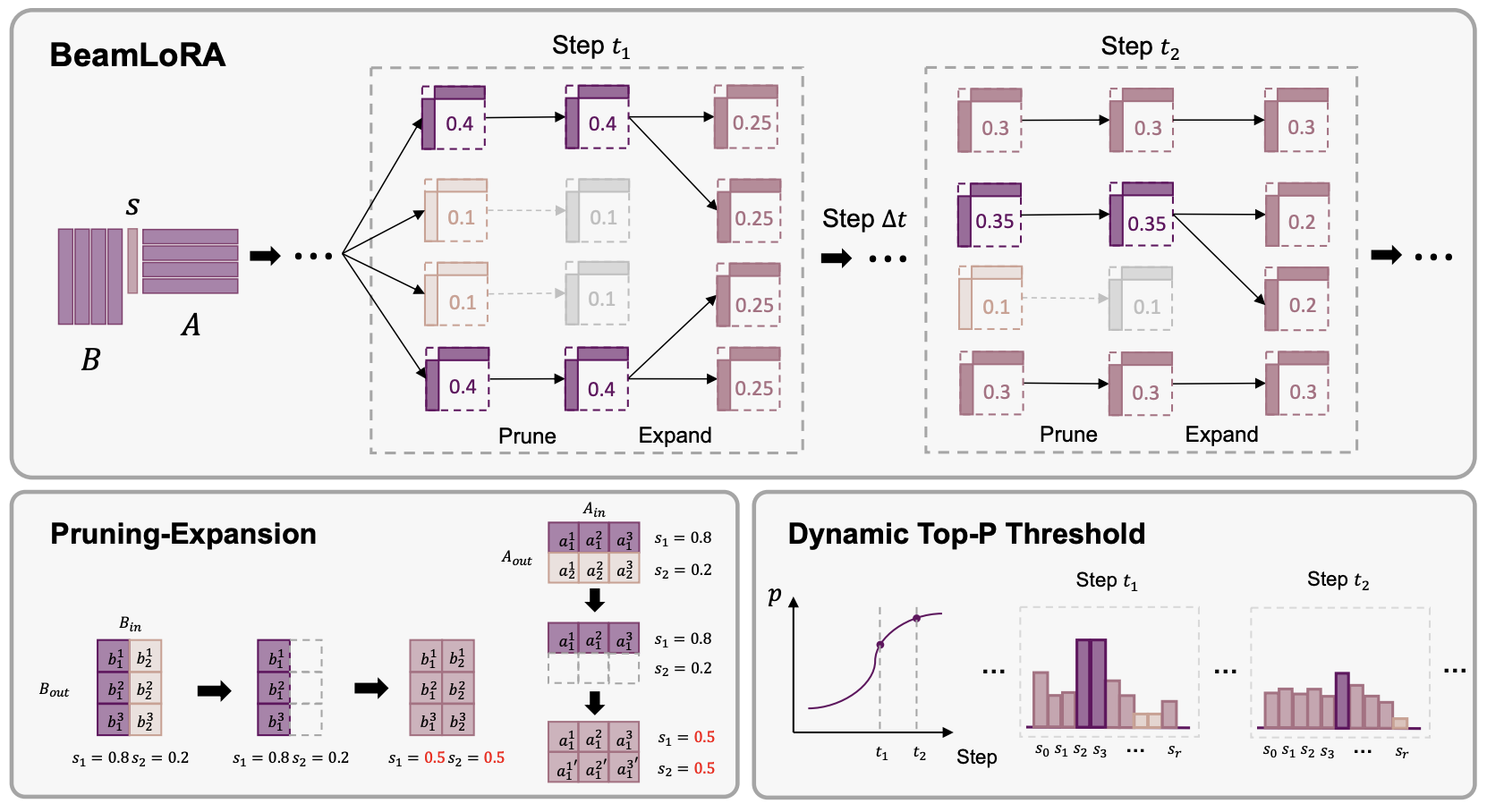

BeamLoRA: Beam-Constraint Low-Rank Adaptation

Naibin Gu, Zhenyu Zhang, Xiyu Liu, Peng Fu, Zheng Lin, Shuohuan Wang, Yu Sun, Hua Wu, Weiping Wang, Haifeng Wang

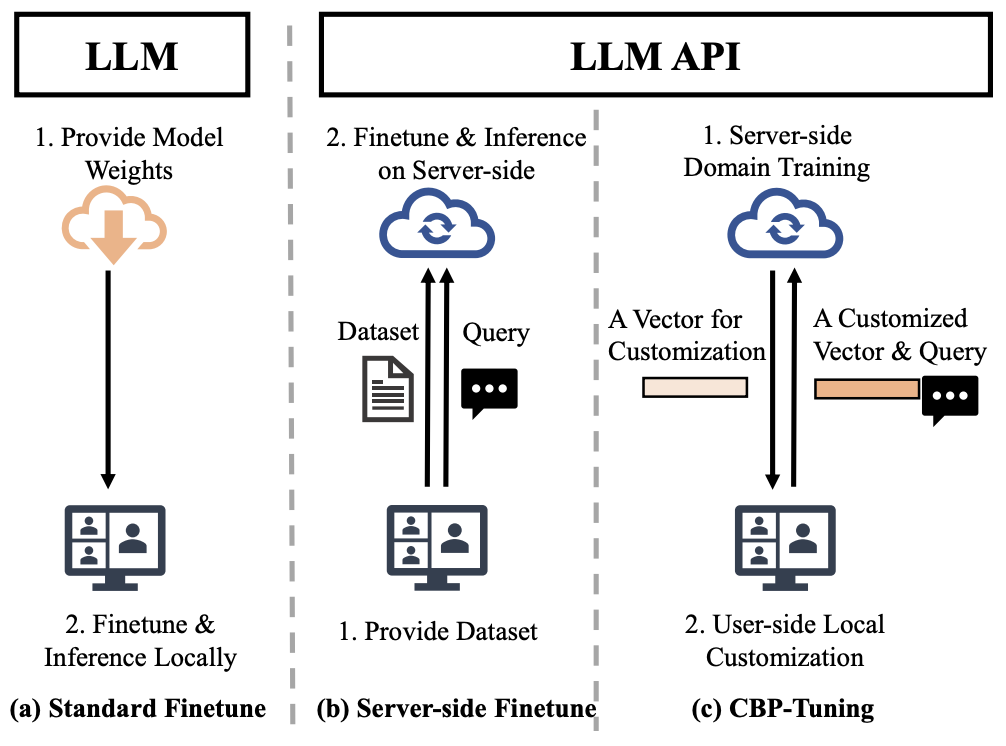

CBP-Tuning: Efficient Local Customization for Black-box Large Language Models

Jiaxuan Zhao*, Naibin Gu*, Yuchen Feng, Xiyu Liu, Peng Fu, Zheng Lin, Weiping Wang

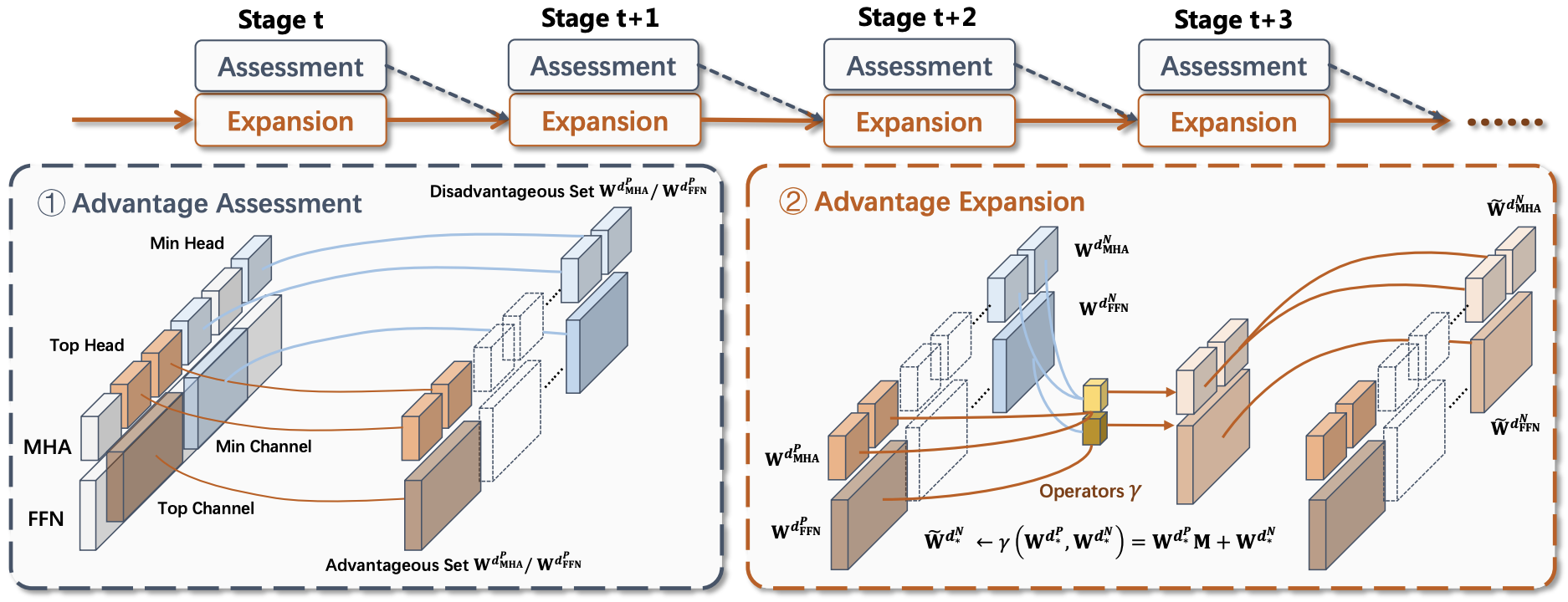

Advantageous Parameter Expansion Training Makes Better Large Language Models

Naibin Gu, Yilong Chen, Zhenyu Zhang, Peng Fu, Zheng Lin, Shuohuan Wang, Yu Sun, Hua Wu, Weiping Wang, Haifeng Wang

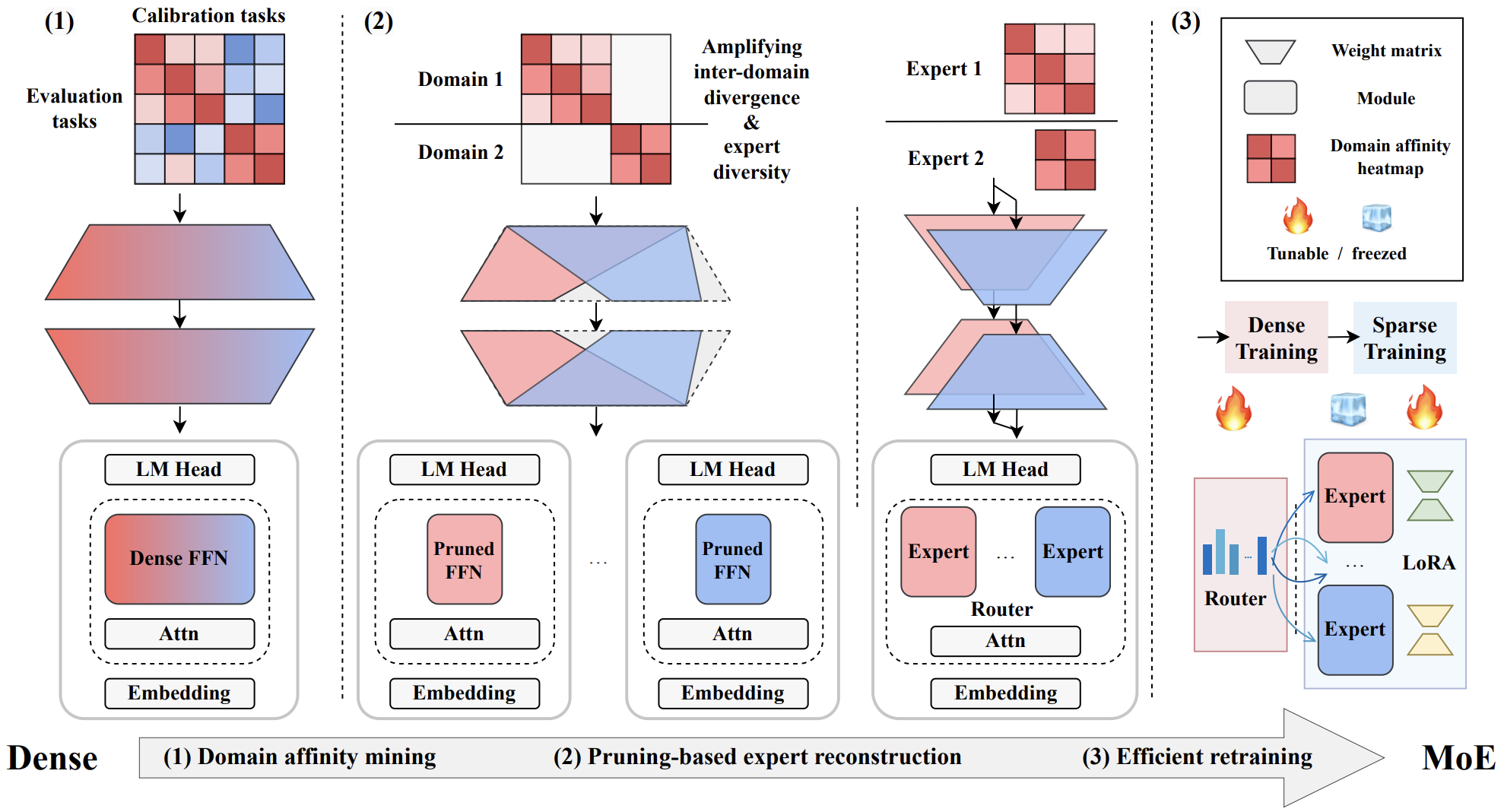

Elastic MoE: Unlocking the Inference-Time Scalability of Mixture-of-Experts

Naibin Gu, Zhenyu Zhang, Yuchen Feng, Yilong Chen, Peng Fu, Zheng Lin, Shuohuan Wang, Yu Sun, Hua Wu, Weiping Wang, Haifeng Wang

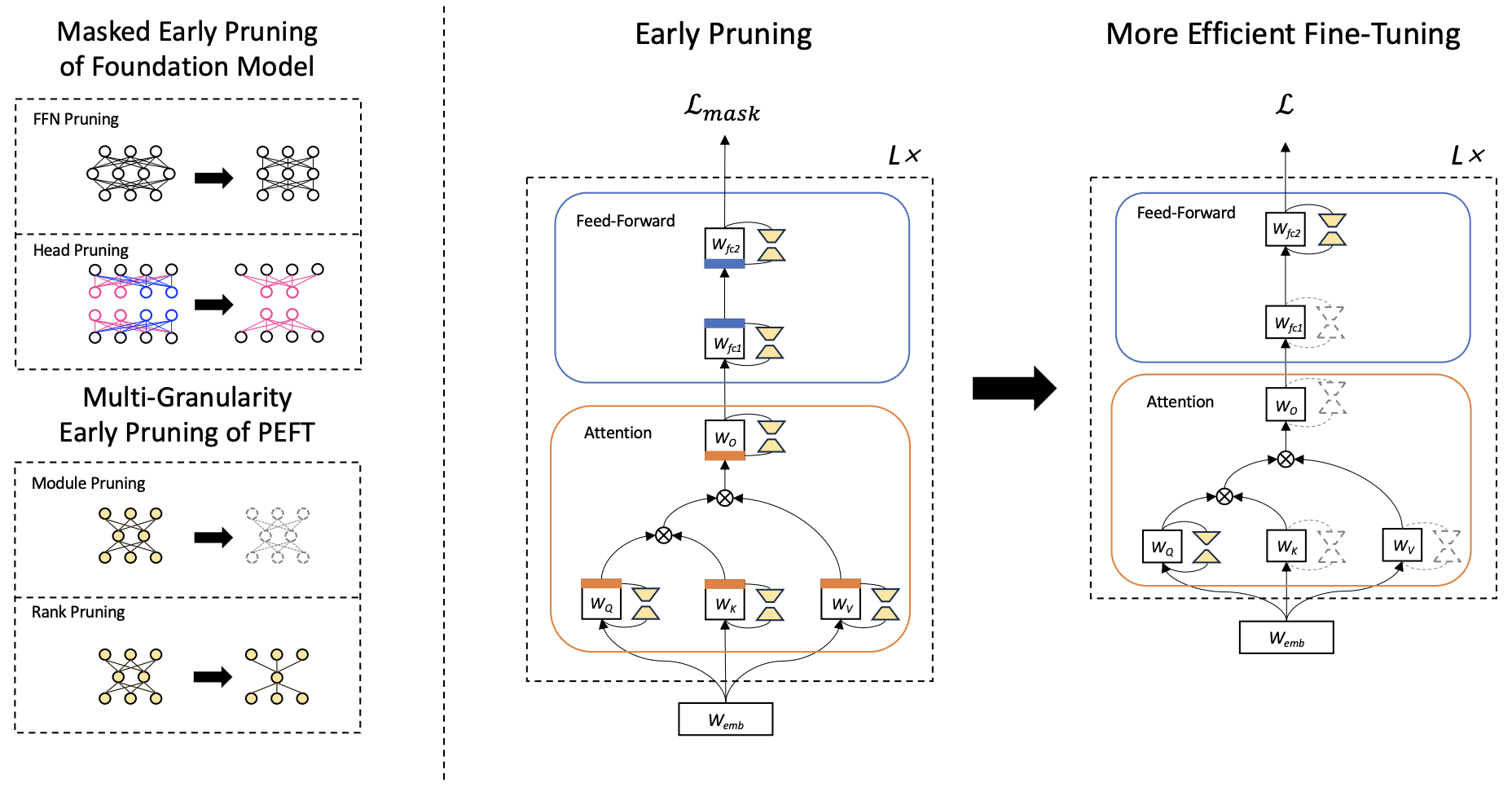

Light-PEFT: Lightening Parameter-Efficient Fine-Tuning via Early Pruning

Naibin Gu, Peng Fu, Xiyu Liu, Bowen Shen, Zheng Lin, Weiping Wang

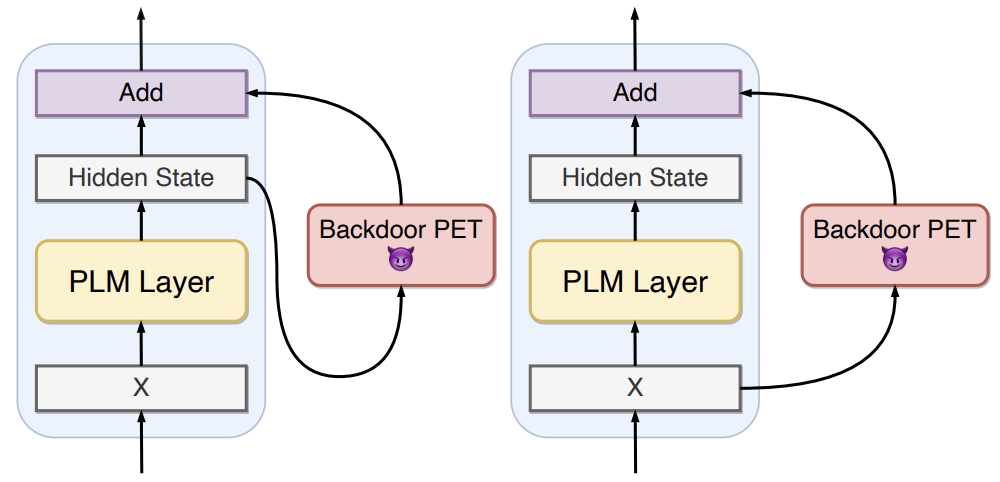

A Gradient Control Method for Backdoor Attacks on Parameter-Efficient Tuning

Naibin Gu, Peng Fu, Xiyu Liu, Zhengxiao Liu, Zheng Lin, Weiping Wang

👬 Co-authored Papers

Yuchen Feng, Bowen Shen, Naibin Gu, Jiaxuan Zhao, Peng Fu, Zheng Lin, Weiping Wang

Xiyu Liu, Zhengxiao Liu, Naibin Gu, Zheng Lin, Wanli Ma, Ji Xiang, Weiping Wang

📖 Education

- Sept. 2022 - Now: PhD student, Institute of Information Engineering (IIE), CAS & UCAS.

- Sept. 2018 - Jun. 2022: Bachelor of Computer Science, China Agricultural University.

💻 Internships

- 2025.06 - Now, ERNIE Star Top Talent Research Intern at the NLP Department, Baidu Inc. Mentors: Haifeng Wang and Zhenyu Zhang

- 2024.10 - 2025.05, Research Intern at the NLP Department, Baidu Inc. Mentor: Zhenyu Zhang